AI, Ethics & Leadership in the New World

“Morality in everyday life is ‘messy’. We are not all bad. And we are not all good. Sometimes we are vicious. Sometimes, purely because of the scent of cinnamon buns in the mall, we are generous.” —Dr. WHu

So what!

Leadership today is grounded even more in moral authority, in addition to organizational authority and compliance with laws and regulations. In a 24-7 connected world, the mere perception of unethical conduct can cause irreparable damage to the reputations of corporations or individuals.

Autonomous machines, such as companion robots, autonomous vehicles, autonomous vacuum cleaners, and hospitality-industry concierge robots, are becoming part of our society. They constantly interact with human beings at close quarters. We need Isaac Asimov’s Laws of Robotics for that robot in our kitchen.

To do this, we first need to examine what ethics and morality are in the eyes of human beings.

On June 20, 2003, a runaway string of 31 unmanned Union Pacific freight cars, carrying 3800 tons of lumber and building materials, was hurtling towards the Union Pacific yards in Los Angeles. The dispatcher thought a Metrolink passenger train was in the yards. The dispatcher ordered the runaway cars shunted to a different track, Track 4, which led to an area of lower-density housing with mostly lower-income residents. Track 4 was rated for transits at 15 mph, far below the speed at which the cars were traveling. The cars derailed. A pregnant woman in the lower-income neighborhood narrowly escaped death.

Was the dispatcher’s decision ethical? What would you do if you were the dispatcher? If the Metrolink train was in the yard and had only one passenger, would you have shunted the cars? What if there were 15 passengers in the Metrolink train, would you have decided differently?

Philosophers, moral psychologists, decision science researchers, and business ethicists have examined various facets of this case. The difficulty of ethical decision making then and there was exacerbated by the ‘fog of decision’: an incomplete set of necessary data, unknown knock-on effects, and ambiguous guidelines for this scenario. A decision had to be made, under risk and under uncertainty.

The dispatcher, and decision makers in other difficult situations, could use an AI ethics engine, which in theory can do unemotional button pushing if and only if it has been trained on unbiased data sets and given a set of clear and non-contradictory ethical guidelines. The advantage is not that AI engines will necessarily deliver the optimal ethical solution. Rather, the cognitive biases inherent in human beings are removed, and society has one less factor of concern. In fact, surveys have shown that people are more willing to accept decisions made by machines than by other human beings.

Leaders and followers want to be on righteous missions. Remember “Don’t Be Evil”? The challenge is determining what is evil, or not evil, given a set of nuanced facts that are often impossible to know at decision time. Furthermore, the projected outcome can at best be described as probabilistic, because some factors are unknown and beyond the decision makers’ control.

The autonomous vehicle industry needs its AI systems to decide when faced with a scenario like the following: two kids suddenly dash in front of the vehicle, ignoring the vehicle’s warning signals. If there is one person on the right, should the rule be to veer right and likely kill that one person? What should the ethical rule be if there are three persons on the right? If all three are members of a violent gang, should the vehicle ram towards them? In that last scenario, the mug shots in a visual database would be useful data points in the AI’s decision-making. However, what if the three are on their way to a local school as reformed role models to teach kids what not to do?

Morality in everyday life, according to empirical studies by moral psychologists and philosophers, is “messy”. We are not all bad. And we are not all good, regardless of whether we are religious or not. Sometimes we are vicious. Sometimes, purely because of the scent of cinnamon buns in the mall, we are generous.

Morality in the abstract, a topic since the dawn of human civilization, is equally messy. Deontological moral philosophers, such as Immanuel Kant, believe that there are absolute rights and wrongs: “Thou shalt not steal”, the sanctity of human life, and so on. Utilitarian moral philosophers, such as Jeremy Bentham, believe that moral decisions and actions should be taken to achieve the most positive outcome for society.

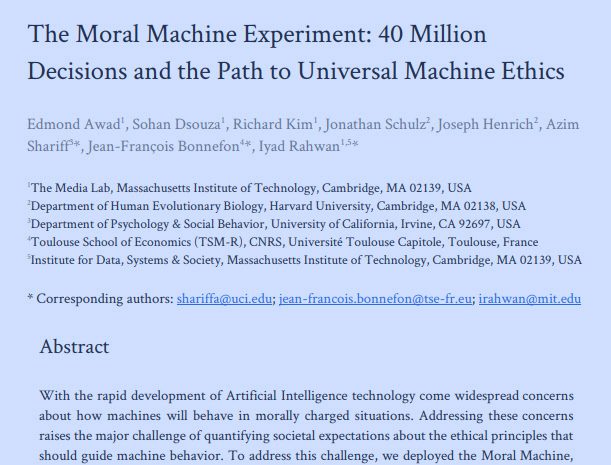

MIT researchers conducted an experiment on a website called The Moral Machine. Millions of people from 233 countries and territories provided over 40 million decisions on the moral dilemmas that autonomous vehicles face. One of the findings is that people are overwhelmingly utilitarian moralists: if a collision is unavoidable, the vehicle should veer right to kill two, not left to kill three, so to speak.

However, people will not buy a vehicle programmed with utilitarian morality if that means the vehicle will ram into the center divider, harming the lone driver inside. To self-proclaimed utilitarian drivers, the life inside the car is deontologically precious.

The same tension between deontological and utilitarian ethics exists in leadership decisions on privacy. For example, as a CEO, you are tasked with knowing as much as you can about your customers, because they have told you to ‘get them’; yet sometimes they think your getting-to-know-them algorithm is ‘creepy.’ The same tension exists in matters of security, safety, medical resource allocation, etc.

Moral philosophers have examined two great classic virtue theorists: Aristotle and Confucius. The multi-dimensionality of human morality is such that Mencius, a disciple of Confucius, believed that human nature is benevolent and that education is the best way to bring out that benevolent nature. Xunzi, also a Confucian disciple, argued instead that people’s nature is bad; therefore, according to the Legalists who came after Xunzi, law should be enforced. In the Confucian classic Grand Learning 大學, a Confucian gentleman should first observe carefully in person and deliberate without self-delusion; from this, he achieves (4) 正心 (zhengxin; moral thinking and ethical behavior) and (5) 修身 (xiushen; self-cultivation for civil conduct). Only after having achieved these can a gentleman attempt to govern his family, (6) 齊家 (qijia), and then to administer a state, (7) 治國 (zhiguo). Aristotle emphasized in Nicomachean Ethics, Book 2, that the man who possesses excellence of character will tend to do the right thing, at the right time, and in the right way.

Classical Aristotelian and Confucian thinkers were not alone in holding that self-cultivation of character is foundational to a civil society in which all members benefit; many Judeo-Christian and other religious thinkers across denominations held the same view.

Some AI ethics engine developers, in their efforts to make AI code serve society, feel that we need, a priori, a set of ethical guidelines from society, as in constructing the ethics algorithm for autonomous vehicles. If society is incapable of providing agreed-on ethical guidelines, the autonomous vehicle industry will suffer from the conflicting nature of human morality and will leave society without many of the benefits this industry can offer.

We can, however, ascend beyond these binary, and somewhat impoverished, moral choices by heeding what the classic virtue philosophers observed and commented on. We start by developing the character of our citizens, who will strive to learn and to be selfless, empathic, impartial, and decent. They think about benevolence towards not only their own families and tribes but also other families and tribes: the greater good. They fail often, but they pursue these goals with both vigor and temperance, and with both creativity and discipline. They are determined ethical agents.

In AI technology, we can program algorithmic ethical agents. Their goals are for the greater good. They aim to optimize a mathematical utility function that deals with uncertainties in both causalities and outcomes and that aggregates ‘soft’ ethical principles and ‘hard’ safety constraints from all ethical agents. These agents are humans and autonomous machines working together as a collective. The collective can use what is called a GAI (generalized additive independence) network, a method for decision making under risk and uncertainty, to elicit and represent ethical principles and safety constraints from all agents.

AI ethics engines then optimize the utility function and provide a statistically optimal solution, rationally and consistently with the von Neumann & Morgenstern (VNM) utility axioms.
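To make the idea concrete, here is a minimal Python sketch of the kind of calculation described above. It is an illustration under stated assumptions, not an actual ethics engine: the attribute names (`harm`, `inequity`, `delay`, `breaks_safety_limit`), the weights, and the three candidate actions are all hypothetical. The ‘soft’ principles are written as a sum of small sub-utilities over overlapping groups of attributes, which is the additive form that GAI decompositions assume; the graph structure and elicitation procedure of a real GAI network are not modeled. ‘Hard’ constraints filter out actions before any optimization, and the remaining actions are ranked by expected utility in the VNM sense.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Outcome = Dict[str, float]  # attribute name -> value (all names are hypothetical)


@dataclass
class Action:
    name: str
    lottery: List[Tuple[float, Outcome]]  # (probability, outcome) pairs summing to 1


# 'Soft' ethical principles: each sub-utility looks at a small group of attributes.
# The groups overlap ("harm" appears twice), as in a GAI-style decomposition.
def harm_term(o: Outcome) -> float:
    return -10.0 * o["harm"]

def fairness_term(o: Outcome) -> float:
    return -2.0 * o["harm"] * o["inequity"]

def delay_term(o: Outcome) -> float:
    return -0.5 * o["delay"]

SOFT_TERMS = [harm_term, fairness_term, delay_term]


# 'Hard' safety constraint: an outcome that breaks it is never acceptable.
def within_safety_limit(o: Outcome) -> bool:
    return o["breaks_safety_limit"] == 0


def utility(o: Outcome) -> float:
    """Aggregate the soft principles additively."""
    return sum(term(o) for term in SOFT_TERMS)


def expected_utility(a: Action) -> float:
    """Probability-weighted utility of an action's possible outcomes."""
    return sum(p * utility(o) for p, o in a.lottery)


def is_admissible(a: Action) -> bool:
    """An action is admissible only if every possible outcome is safe."""
    return all(within_safety_limit(o) for p, o in a.lottery if p > 0)


def choose(actions: List[Action]) -> Optional[Action]:
    """Return the admissible action with the highest expected utility, if any."""
    admissible = [a for a in actions if is_admissible(a)]
    return max(admissible, key=expected_utility) if admissible else None


ACTIONS = [
    Action("A", [(0.75, {"harm": 0, "inequity": 0, "delay": 2, "breaks_safety_limit": 0}),
                 (0.25, {"harm": 1, "inequity": 0, "delay": 2, "breaks_safety_limit": 0})]),
    Action("B", [(1.0, {"harm": 0, "inequity": 0, "delay": 1, "breaks_safety_limit": 1})]),
    Action("C", [(0.5, {"harm": 0, "inequity": 0, "delay": 0, "breaks_safety_limit": 0}),
                 (0.5, {"harm": 1, "inequity": 1, "delay": 0, "breaks_safety_limit": 0})]),
]

best = choose(ACTIONS)
print(best.name if best else "no admissible action")
```

In this toy setup, action B has the best raw utility but is excluded because it breaks the hard constraint, so the choice falls to the better of A and C by expected utility. The sketch also shows where the hard questions live: in who sets the weights, who defines the constraints, and how the probabilities are estimated.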

On the other hand, human beings often behave suboptimally or irrationally in decision making. We human beings, with wet yet incredibly efficient neural networks, still need to work through uniquely human issues: for example, what is considered ethical by one “tribe” may be considered unethical by another “tribe”. The same tribe may change its opinion within 10 years on the same set of facts. As a biological species, Homo sapiens has survived and thrived after countless years of fighting against evolutionary odds. Could it be that the ultimate goal of morality and ethics is the continued survival of our species?

From the viewpoints of both neuroscience and AI technology, normative moral and civic education is an efficient way to populate our society with ethical individuals of excellent character. Today and tomorrow, our societies depend on them to deal with current and emerging ‘messy’ morality issues.

Education itself enhances the plasticity and the creativity with which human brains are endowed. Excellence in both teaching and learning continues to be Homo sapiens’ secret to beating the evolutionary odds: we are incomparable in passing on and adding to cumulative knowledge and techno-cultural artifacts from one generation to the next, including leadership knowledge, grounded in ethics, for the greater good of humanity.

How do we get to next? Dr. Albert Hu navigates the crosscurrents of artificial intelligence and machine learning (AI/ML).