Is 3D Machine Vision Right for Vision-Guided Robotics?

OnRobot’s new 2.5D product release, Eyes, and others seem to be saying: not so fast

Misstep or brilliant move?

Simplicity never goes out of style. As Einstein put it: “Everything should be made as simple as possible, but not simpler.” Could OnRobot’s new product release, the 2.5D Eyes robot camera, be cut from the same cloth?

OnRobot’s Eyes is a robot vision system that isn’t 2D or 3D, but 2.5D. In our three-dimensional world, where every part or work product has three dimensions, it seems natural to give a robot 3D sight so it can plainly see the height, width and depth of any part.

For a decade or more, the vision-guided robotics herd (think Cognex, Basler, Zivid, Photoneo, etc.) has been tramping out a 3D trail as automation’s vision solution to almost everything, even the elusive bin-picking problem. Did OnRobot, a smallish gripper/EOAT (end-of-arm tooling) manufacturer, misstep by investing in 2.5D vision technology instead of 3D?

Basically, 2D machine vision sees only two dimensions, length and width; 3D sees all three; 2.5D, on the other hand, sees what 2D sees but adds just enough of 3D’s depth perception (a part’s height) to make it quite handy at picking and placing random parts or finished products, or even emptying bins.
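One common way to picture the difference: a 2.5D view stores a single height for each (x, y) location, a so-called height map, whereas a full 3D model can describe surfaces at any depth, including undersides and overhangs. The Python sketch below is purely illustrative and is not a description of how OnRobot’s Eyes works internally; the function names, the grid layout and the “tallest item first” picking rule are all assumptions made for the example.

```python
from typing import Optional

# A height map: one height value (or None for "no data") per grid cell.
HeightMap = list[list[Optional[float]]]


def build_height_map(points: list[tuple[float, float, float]],
                     cell_size: float, rows: int, cols: int) -> HeightMap:
    """Project (x, y, z) points onto an x-y grid, keeping the tallest z per cell.

    Hypothetical helper for illustration: a 2.5D view keeps one height per
    (x, y) location, so anything hidden underneath is simply not represented.
    """
    grid: HeightMap = [[None] * cols for _ in range(rows)]
    for x, y, z in points:
        col = int(x // cell_size)
        row = int(y // cell_size)
        # Ignore points that fall outside the grid.
        if 0 <= row < rows and 0 <= col < cols:
            current = grid[row][col]
            if current is None or z > current:
                grid[row][col] = z
    return grid


def topmost_cell(grid: HeightMap) -> Optional[tuple[int, int, float]]:
    """Return (row, col, height) of the tallest cell, or None if the grid is empty.

    A naive "pick the top-most item first" rule, assumed here for illustration.
    """
    best: Optional[tuple[int, int, float]] = None
    for r, row in enumerate(grid):
        for c, z in enumerate(row):
            if z is not None and (best is None or z > best[2]):
                best = (r, c, z)
    return best


if __name__ == "__main__":
    # Two readings on a low part near the origin, one on a taller part further out.
    pts = [(0.5, 0.5, 10.0), (0.6, 0.4, 12.0), (2.5, 1.5, 30.0)]
    hm = build_height_map(pts, cell_size=1.0, rows=2, cols=3)
    print(hm)                # [[12.0, None, None], [None, None, 30.0]]
    print(topmost_cell(hm))  # (1, 2, 30.0)
```

Because each cell keeps only its tallest reading, a height map is cheap to compute and easy to hand to ordinary 2D image tools, but it cannot see what lies beneath the top layer; that is roughly the trade-off the 2.5D-versus-3D question turns on.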

The bin-emptying problem

For as long as robotics has tried to master the art of emptying bins, complexity, and expensive complexity at that, has been its constant companion. The ability to give a robot eyes, along with a little direction about what to pick and how, has arrived. It’s called vision-guided robotics (VGR), but in its high-end 3D form the technology is very pricey: basic 3D VGR starter systems can begin at $40k, making them more expensive than the cobots they direct.

“2.5D is rapidly emerging as the perfect technology for vision-guided applications,” claims OnRobot’s CEO, Enrico Krog Iversen. “Compared to 2D it adds not only length and width but also height information for the specific part, which is ideal when objects may vary in height or if objects must be stacked.”

Emptying bins is a universal need in manufacturing and logistics, but when solutions become complex and expensive, the addressable marketplace for pricey picking gear shrinks considerably, especially among budget-sensitive SMEs (small and medium-sized enterprises).

With the World Bank reporting that SMEs represent over 90 percent of global businesses, it would seem that OnRobot’s Eyes, with its friendlier cost of entry, was designed with such a massive marketplace in mind.

Demand for machine vision is forecast to grow quickly over the next five years.

According to research firm Markets & Markets: “The global machine vision market size is expected to grow from $10.7 billion in 2020 to $14.7 billion by 2025, at a CAGR of 6.5 percent during the forecast period. The major driving factors in the market are the increasing need for quality inspection and automation, growing demand for vision-guided robotic systems, and increasing adoption of vision-guided robotic systems.”

Grand View Research is a bit more generous in its projection: $18.24 billion by 2025 (CAGR: 7.7 percent).
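The Markets & Markets numbers hang together: compounding $10.7 billion at 6.5 percent a year for five years gives roughly $14.66 billion, and solving for the rate from the rounded endpoints gives about 6.56 percent, the small gap presumably being rounding in the published figures. A short Python check follows (the helper names are ours, for illustration only). The Grand View figure can’t be checked the same way, since no starting value is quoted for it.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate implied by growing from start to end over years."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: float) -> float:
    """Value after compounding start at a fixed annual rate for the given years."""
    return start * (1.0 + rate) ** years


if __name__ == "__main__":
    # Markets & Markets figures: $10.7B (2020) -> $14.7B (2025), in billions of USD.
    print(f"implied CAGR: {cagr(10.7, 14.7, 5):.2%}")                   # 6.56%
    print(f"10.7 at 6.5% for 5 years: {project(10.7, 0.065, 5):.2f}")  # 14.66
```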

And while we’re scaling backwards to 2.5D, let’s not knock 2D, either. Recognition Robotics (Elyria, Ohio) pairs a simple 2D camera with its CortexRecognition software, which can guide an industrial robot in a full six degrees of freedom (X, Y, Z, Rx, Ry, Rz).

“The real groundbreaking and unique thing here,” says the company’s founder and CEO, Simon Melikian, “is that with a single image from a 2D camera, we are telling the robot what the object is and where it is in space in 3D, or six degrees of freedom.”

Interestingly, what Melikian has done with a 2D camera, and OnRobot with its 2.5D Eyes, is create 3D VGR: in short, equal horsepower with far less complexity and at a much lower price point.

Melikian further claims: “The algorithms are based on the human cognitive ability to recognize objects. When I developed these algorithms, I was mimicking the human visual cortex. We’ve used knowledge about human brain function, the point of view of the human eye, and put this into the software realm.”
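For readers new to the notation, a six-degree-of-freedom pose is three positions (X, Y, Z) plus three rotations (Rx, Ry, Rz), which a robot can combine into a single transform mapping points on the part into its own base frame. The Python sketch below shows that conversion under one stated assumption: the angles are treated as fixed-axis rotations applied about X, then Y, then Z. Robot vendors differ here (some read Rx, Ry, Rz as Euler angles in another order, others as an axis-angle rotation vector), and nothing in the sketch reflects how CortexRecognition actually computes a pose; it only shows what the six numbers mean.

```python
import math

Matrix3 = list[list[float]]


def matmul3(a: Matrix3, b: Matrix3) -> Matrix3:
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_xyz(rx: float, ry: float, rz: float) -> Matrix3:
    """Rotation from angles in radians, ASSUMING fixed-axis X, then Y, then Z
    (R = Rz @ Ry @ Rx). Real robot controllers differ in this convention."""
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    rot_x = [[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]]
    rot_y = [[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]]
    rot_z = [[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]]
    return matmul3(rot_z, matmul3(rot_y, rot_x))


def pose_to_transform(x: float, y: float, z: float,
                      rx: float, ry: float, rz: float) -> list[list[float]]:
    """4x4 homogeneous transform for a 6-DOF pose (X, Y, Z, Rx, Ry, Rz)."""
    r = rotation_xyz(rx, ry, rz)
    return [r[0] + [x], r[1] + [y], r[2] + [z], [0.0, 0.0, 0.0, 1.0]]


def transform_point(t: list[list[float]],
                    p: tuple[float, float, float]) -> tuple[float, ...]:
    """Map a point from the part's frame into the robot's base frame."""
    px, py, pz = p
    return tuple(t[i][0] * px + t[i][1] * py + t[i][2] * pz + t[i][3]
                 for i in range(3))


if __name__ == "__main__":
    # Part located at (1, 2, 3) and rotated 90 degrees about Z.
    t = pose_to_transform(1.0, 2.0, 3.0, 0.0, 0.0, math.pi / 2)
    print(transform_point(t, (1.0, 0.0, 0.0)))  # approximately (1.0, 3.0, 3.0)
```

Setting rx and ry to zero leaves X, Y, Z plus a rotation about Z, which is often all a top-down pick from a flat surface needs; the remaining two angles matter when parts lie tilted, as they tend to in a jumbled bin.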

Seems there’s a problem with 3D imaging

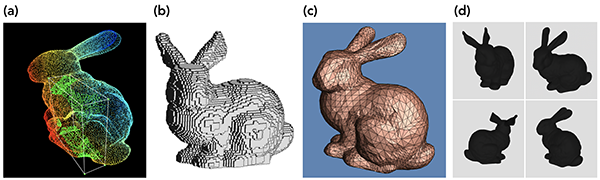

Here’s the 3D imaging output of the same object in four different 3D representations; the surface detail and resolution of these images are not very good.

By “not very good,” we mean that 3D imaging can’t be used for close surface inspection of anomalies, imperfections and defects in, say, a part exiting a CNC machine. 3D imaging will detect gross imperfections, such as a wrong part height or width or a missing piece, but close-ups are a challenge.

Here’s how the Stanford researchers behind these images explain it: “Now that you’ve turned your 3D data into a digestible format [any of the four rabbit photos], you need to actually build out a computer vision pipeline to understand it. The problem here is that extending traditional deep learning techniques that work well on 2D images (like CNNs [Convolutional Neural Networks]) to operate on 3D data can be tricky depending on the representation of that data, making conventional tasks like object detection or segmentation challenging.”

A quick call to a 3D machine vision leader generally confirmed the sum and substance of what the Stanford researchers reported. A spokesperson there suggested that if high-resolution surface QC is needed, 2D VGR would be in order.

Subsequent outreach to Neurala, which bills itself as “dedicated to solving your hardest visual inspection challenges using AI, whether your inspections are for manufacturing, robotics, or drone applications,” turned out to be an ultra-brief exchange. “We don’t use 3D images,” came the quick reply. End of story.

Circling back

OnRobot’s first-ever launch into machine vision is simple, elegant and effective…and priced to please (although OnRobot refuses to disclose the asking price of its new gear, except to say that it’s “competitive”). As for product design, OnRobot has another winner, just as with the company’s past two product releases. The design team knows how to make good tech look cool, a skill set usually found only in Silicon Valley.

Iversen was quick to credit OnRobot’s 2019 acquisition of Blue Workforce (Aalborg) and its tech team as the source of the idea for Eyes. That’s less than one year from acquisition to new product launch, which says a lot about Iversen’s eye for acquisitions and his ability to seamlessly integrate new team members into the OnRobot flow.

By the way, the founder of Blue Workforce, and the guy who originally hired Iversen’s Eyes team, is Preben Hjørnet, one of robotics’ best and most interesting minds. Preben is now nearing the launch pad with The Gripper Company (yes, yet another Danish robot company, arising from a country of barely 5.8 million citizens!).

…How come China has yet to acquire all of Denmark? Jus’ say’n.

When Iversen says “2.5D is rapidly emerging as the perfect technology for vision-guided applications,” he may well be onto something larger than OnRobot: a VGR trend that’s going to get more attention very soon. It is a trend attractive enough to draw more tech interest and VC money, and maybe even notice from the brainy acquisitions team at Teradyne (Chelmsford), which has already scooped up robotics tech notables Universal Robots, MiR (Mobile Industrial Robots), Energid, and AutoGuide Mobile Robots. OnRobot, a robot gripper company, might someday make a nice complement to Teradyne’s existing robotics portfolio.

Add in Melikian’s algorithms for 2D machine vision and what he sees for the future of VGR, and suddenly the market forecasts from Markets & Markets and Grand View Research begin to look a bit on the low side.

What then of 3D? As Marc Andreessen famously predicted: “Software is eating the world.” We may be witnessing software’s next meal.

As Einstein put it: “Everything should be made as simple as possible, but not simpler.”
